John Hatley

UX Designer

Environmental Health Overview Application

Migrating a Windows-based application to the cloud and improving usability.

Basics

2019/2020

Figma, Axure

UX/UI Design

About

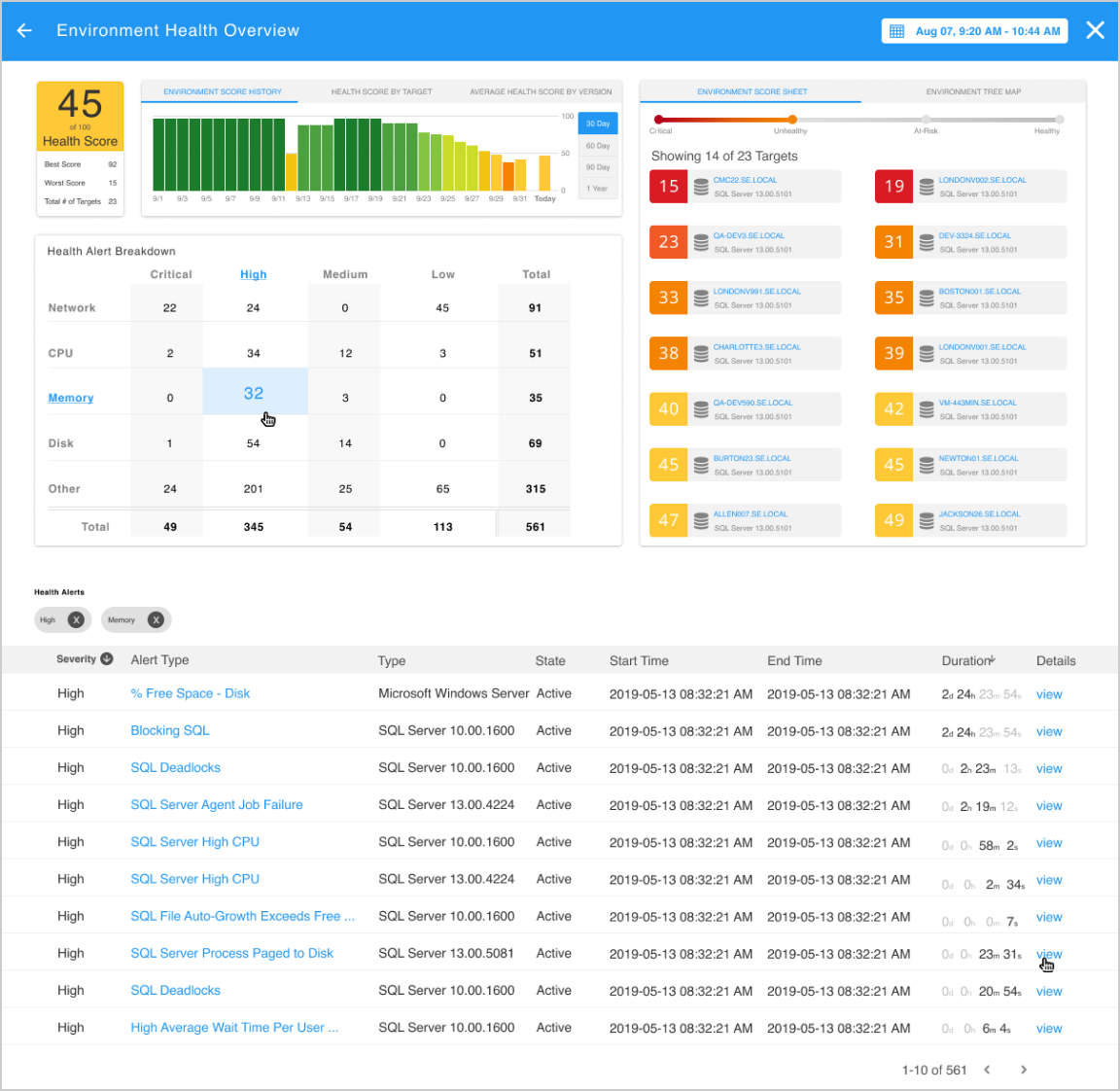

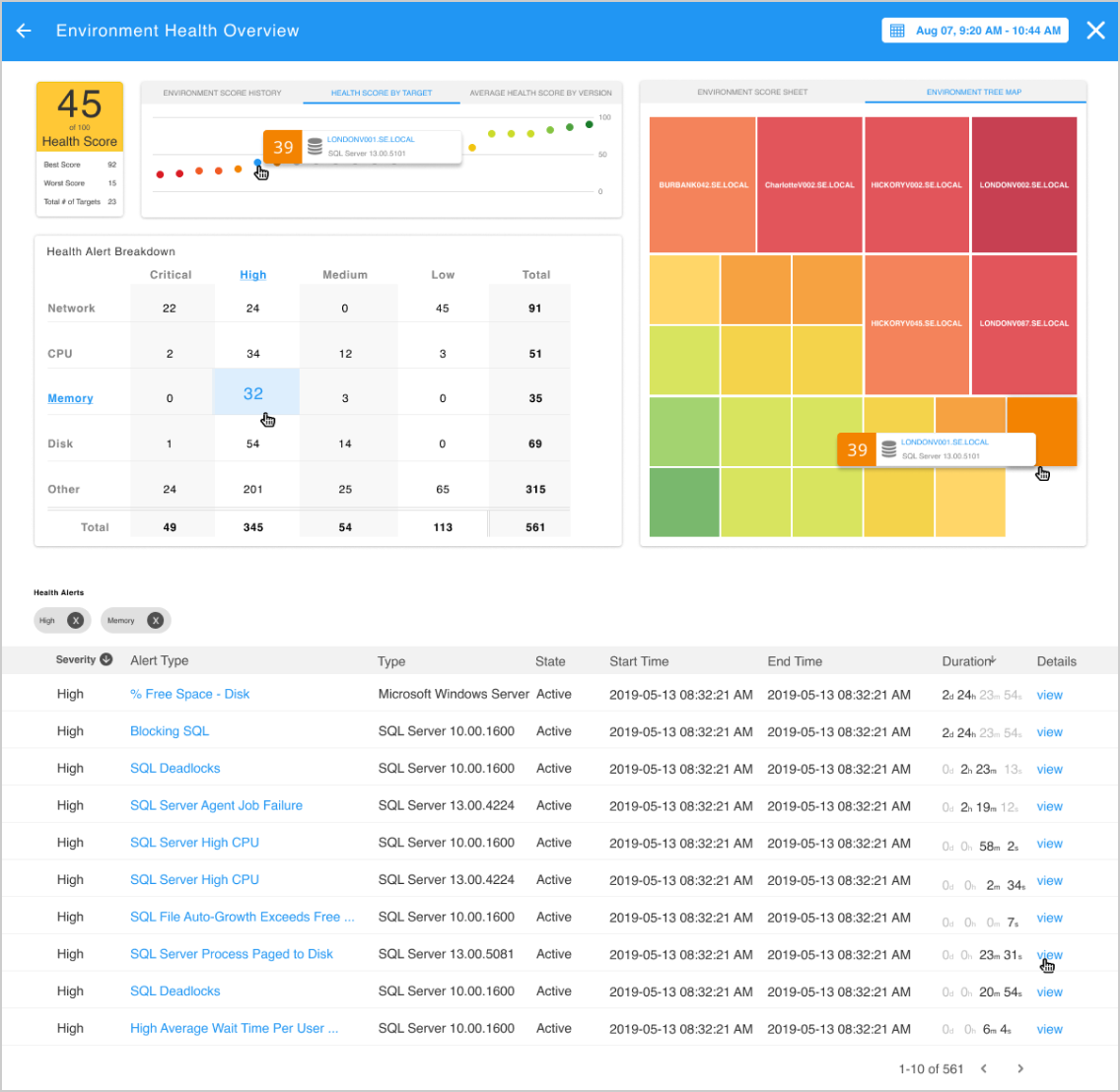

Provide new and existing clients with proven diagnostic capabilities through a cloud-based application, and improve user efficiency in diagnosing and resolving environmental issues.

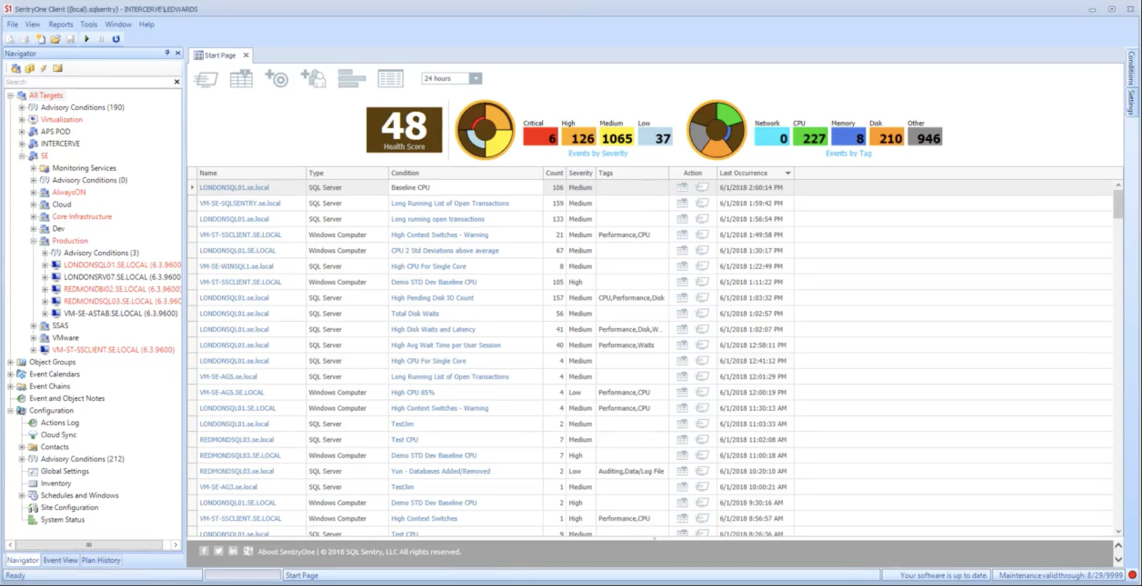

Replicate the existing feature set in a cloud-based application, improve usability, and reduce the learning curve.

My role was to lead the user research, perform a holistic review of the existing application, deliver the UX and UI patterns, build a clickable prototype, and conduct end-user testing and validation.

User Research

2 groups of 5: existing users and free agents

Experience with the Windows application ranged from 1 to 12 years.

DBAs, developers, and generalists with 1 to 8 years of database experience.

Findings & Collateral

Users identified several areas in the existing interface that were either confusing or missing:

These issues appeared throughout most of the legacy application, because it presents large volumes of grid-based data directly to the user by default.

In addition to user input, I drew on several other information sources to build a complete picture before designing:

Solution

Conclusion

After the initial conceptual review with product management and stakeholders, I created a clickable prototype in Axure to conduct user testing. As part of the stakeholder review, I agreed to conduct A/B testing against a more traditional (read: copy/paste) version of the legacy interface that used a hierarchical grid. I built that prototype as well and tested both, reaching the following conclusions: